Perhaps it doubles the number of memory accesses, which, to be fair, is the biggest cost, but in theory it does not increase the processing cost, because the framebuffer is 8bpp rather than 16bpp.
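A quick back-of-the-envelope check of that trade-off. This assumes 352x224 as the low-res size and 704x224 as the hi-res size, and it treats every pixel as one framebuffer write. That is a simplification, since we don't know exactly how VDP1 batches its writes. The helper names are just for illustration.

```c
#include <stdint.h>

/* Hypothetical helper: bytes written to fill a w x h area at a given depth. */
static uint32_t fill_bytes(uint32_t w, uint32_t h, uint32_t bits_per_pixel)
{
    return w * h * bits_per_pixel / 8u;
}

/* Hypothetical helper: number of individual pixel writes for the same area,
 * assuming one write per pixel (a simplification of real VDP1 behavior). */
static uint32_t fill_writes(uint32_t w, uint32_t h)
{
    return w * h;
}
```

At 704x224 you get twice as many pixel writes as at 352x224, but because each hi-res pixel is 8 bits instead of 16, the total bytes come out the same (157,696 in both cases).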

Of course, we don't know how VDP1 really works under the hood ...

I think the only difference for VDP1 is that each polygon is larger. The fields, transform parameters, and so on are the same.
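To show what "larger" means in numbers, here's a small sketch. It assumes hi-res coordinates are obtained by simply doubling the projected x coordinates, and it measures the quad with the shoelace formula. VDP1 actually rasterizes quads line by line, with some overdraw, so area is only a rough proxy for cost. `vertex_t`, `quad_area2` and `to_hires` are hypothetical names.

```c
#include <stdint.h>
#include <stdlib.h>

typedef struct { int32_t x, y; } vertex_t;

/* Twice the (absolute) area of a quad, via the shoelace formula. */
static int32_t quad_area2(const vertex_t v[4])
{
    int32_t sum = 0;
    for (int i = 0; i < 4; i++) {
        const vertex_t *a = &v[i];
        const vertex_t *b = &v[(i + 1) % 4];
        sum += a->x * b->y - b->x * a->y;
    }
    return abs(sum);
}

/* Hypothetical low-res to hi-res mapping: double x, keep y. */
static void to_hires(const vertex_t in[4], vertex_t out[4])
{
    for (int i = 0; i < 4; i++) {
        out[i].x = in[i].x * 2;
        out[i].y = in[i].y;
    }
}
```

A 10x10 square becomes 20x10 after `to_hires`, so its area (and roughly its pixel count) doubles while the command itself stays the same apart from the coordinates.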

Another consequence of the framebuffer being 8bpp is that all pixels are color bank codes.

Because of that, the order of access is simpler than with 4bpp CLUT textures and Gouraud shading.
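A simplified software model of the two per-pixel paths, just to show why the color bank path has fewer steps. This is not VDP1's real pipeline. It assumes a 4bpp texture drawn as color bank into an 8bpp framebuffer (bank bits in the upper nibble), and for the CLUT path it assumes each 5-bit Gouraud channel is centred on 16, i.e. 16 means "no change". All function names are hypothetical.

```c
#include <stdint.h>

/* Color bank path (simplified): combine the bank bits with the 4-bit texel
 * and write the result. No extra memory read is needed for the color. */
static uint8_t colorbank_pixel(uint8_t bank, uint8_t texel4)
{
    return (uint8_t)((bank & 0xF0u) | (texel4 & 0x0Fu));
}

/* Clamp a 5-bit channel result to 0..31. */
static int clamp5(int v)
{
    return v < 0 ? 0 : (v > 31 ? 31 : v);
}

/* 4bpp CLUT + Gouraud path (simplified): the texel indexes a 16-entry lookup
 * table (an extra memory read on real hardware), then the per-pixel Gouraud
 * value is applied to each RGB555 channel. Assumption: 16 is neutral. */
static uint16_t clut_gouraud_pixel(const uint16_t clut[16], uint8_t texel4,
                                   uint16_t gouraud)
{
    uint16_t c = clut[texel4 & 0x0Fu];
    int r = (c & 0x1F) + (gouraud & 0x1F) - 16;
    int g = ((c >> 5) & 0x1F) + ((gouraud >> 5) & 0x1F) - 16;
    int b = ((c >> 10) & 0x1F) + ((gouraud >> 10) & 0x1F) - 16;
    return (uint16_t)((c & 0x8000u) | (clamp5(b) << 10) | (clamp5(g) << 5) | clamp5(r));
}
```

The color bank case is one texel read and a bit merge; the CLUT case adds a table read plus per-channel arithmetic and clamping before the write.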

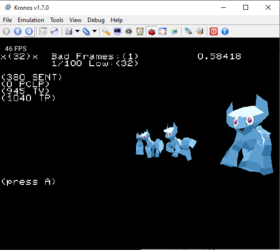

It should be noted that when VDP1 gets near its performance limit in hi-res mode, some polygons start being drawn only on the even scanlines, leaving their odd scanlines blank. The point at which this starts varies quite a bit, which shows that VDP1 performance can't simply be estimated from the number of polygons. On top of that, the polygons nearest the screen are rendered last (polygons are drawn back to front), so they are the first to be affected. They are also the biggest, and thus the most costly.
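A crude model of why the nearest polygons get hit first: sort back to front, spend a per-frame pixel budget, and stop when it runs out. The budget, the "pixels as cost" proxy, and the hard cutoff are all assumptions; real VDP1 behavior (like the even-scanline-only drawing above) is more complicated. `poly_t` and `draw_within_budget` are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

typedef struct {
    int32_t depth;   /* larger = farther from the camera */
    uint32_t pixels; /* approximate pixels touched (cost proxy) */
    int drawn;       /* set to 1 if it fit within the budget */
} poly_t;

/* qsort comparator: farthest first (painter's-algorithm order). */
static int farthest_first(const void *pa, const void *pb)
{
    const poly_t *a = pa, *b = pb;
    return (a->depth < b->depth) - (a->depth > b->depth);
}

/* Hypothetical model: draw back to front until the next polygon would
 * exceed the frame budget, then stop. Returns how many were drawn. */
static size_t draw_within_budget(poly_t *polys, size_t n, uint32_t budget)
{
    uint32_t spent = 0;
    size_t drawn = 0;
    for (size_t i = 0; i < n; i++)
        polys[i].drawn = 0;
    qsort(polys, n, sizeof *polys, farthest_first);
    for (size_t i = 0; i < n; i++) {
        if (spent + polys[i].pixels > budget)
            break; /* out of time: everything nearer is lost */
        spent += polys[i].pixels;
        polys[i].drawn = 1;
        drawn++;
    }
    return drawn;
}
```

Since the near polygons are both last in the order and the largest, they are exactly the ones that fall past the cutoff in this model.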

In reality, a maximum of 1400 mostly small polygons is not great, but it should be OK.

When I actually implement this, we'll see how strong a rationale there is for the pre-clipping disable stuff that XL2 figured out.
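For reference, a hedged sketch of one way the pre-clipping disable bit could be applied per polygon. This is not necessarily what XL2 does; it's a hypothetical policy that only skips VDP1's pre-clipping check for quads whose vertices are all on screen, so fully off-screen quads still get rejected early. The bit position (bit 11 of CMDPMOD) is from memory of the VDP1 manual and should be checked against your own docs or headers; `choose_pmod` and friends are made-up names.

```c
#include <stdint.h>

/* Assumption: pre-clipping disable is bit 11 of CMDPMOD. Verify this. */
#define PMOD_PRECLIP_DISABLE (1u << 11)

typedef struct { int16_t x, y; } point_t;

/* True if all four vertices lie inside [0, w) x [0, h). Since the
 * rectangle is convex, the whole quad is then on screen too. */
static int fully_on_screen(const point_t v[4], int16_t w, int16_t h)
{
    for (int i = 0; i < 4; i++) {
        if (v[i].x < 0 || v[i].x >= w || v[i].y < 0 || v[i].y >= h)
            return 0;
    }
    return 1;
}

/* Hypothetical policy: disable pre-clipping only for fully visible quads. */
static uint16_t choose_pmod(uint16_t pmod, const point_t v[4], int16_t w, int16_t h)
{
    if (fully_on_screen(v, w, h))
        return (uint16_t)(pmod | PMOD_PRECLIP_DISABLE);
    return (uint16_t)(pmod & ~PMOD_PRECLIP_DISABLE);
}
```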

It'll probably go back in.